I’m London-based and create my own proxies using Three UK, with an RPi + Huawei dongle. With a bit of effort anyone can do it. But in case you want to buy instead, then this:

Or simply google mobile proxy sellers. I can’t speak for other countries, but with Three UK, when the IP changes it bounces all across the country. That means it doesn’t matter where you’re based: you’ll ‘appear’ to be somewhere random in the country.

I suspect there’s some connection to the nearest tower itself, so a bigger, denser city may have an advantage over a smaller one. I wasn’t able to figure this out, so I can’t confirm or deny it. Maybe someone who runs an entire network of proxies can clear it up.

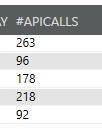

Also, I’ve had a few people asking for my settings. There’s no secret here. 300-700 API calls (500 on average) before an account dies is not a lot, sadly; I wish I could reach higher numbers. That’s why I’ve been running a 100d/30h test, a no-tag-name method test and a push-to-the-max-within-a-day test. So far, all of them result in the same number of API calls before the account is burned.

Just run your scrapers in 3 shifts, make sure the shifts don’t overlap, and spread the actions out a lot. If you’re doing 100-300 API calls per day, each scraper won’t have more than 2-4 active sessions per day. That means you could potentially run a lot more than 50 scrapers if your proxy rotates every 5 min.

Think about it this way: 1440 min (1 day) / 5 min = 288 unique IPs, locations and sessions.

Each session should safely handle 5 concurrent connections (realistically more, but let’s say 5 to be safe). So, in theory, you could run 288 × 5 = 1440 sessions per day safely.

Finally, 1440 sessions / 4 (the average number of connections per account before its API ceiling is reached) = 360 potential accounts running safely on 1 mobile proxy.
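The back-of-the-envelope maths above can be sketched as a small Python function. The function and parameter names are hypothetical, and the defaults are simply the numbers from this post (5 min rotation, 5 connections per IP window, 4 connections per account). Treat the result as a theoretical ceiling, not a tested limit.

```python
MINUTES_PER_DAY = 24 * 60  # 1440 minutes in a day


def proxy_capacity(rotation_min: int = 5,
                   conns_per_ip: int = 5,
                   conns_per_account: int = 4) -> dict:
    """Hypothetical helper: theoretical accounts per mobile proxy per day."""
    # One new IP (and location) every rotation window
    ips_per_day = MINUTES_PER_DAY // rotation_min
    # Each IP window handles a fixed number of concurrent connections
    sessions_per_day = ips_per_day * conns_per_ip
    # Each account uses this many connections before hitting its API ceiling
    max_accounts = sessions_per_day // conns_per_account
    return {
        "ips_per_day": ips_per_day,
        "sessions_per_day": sessions_per_day,
        "max_accounts": max_accounts,
    }


if __name__ == "__main__":
    print(proxy_capacity())
```

Changing `rotation_min` shows how much the ceiling depends on how often your proxy rotates: a slower rotation means fewer unique IPs per day and proportionally fewer accounts.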

Yes, these are crazy numbers lol. I wouldn’t even go past 100 accounts, but 50 is safe imo as long as you spread your settings out enough.

Ofc, don’t be stupid: don’t log them all in at once, and make sure your sessions aren’t all overlapping at the same time, which will burn the accounts. This part takes a bit of practice and effort, but it’s 100% achievable with mp settings.