There is a new checkbox called USE ONLY EB FOR ALL ACTIONS, and I checked it as well. Unfortunately, we still can’t log in using the EB. We have to log in through the API, and only when you turn a tool on does it log in to the EB. After that, though, we can check this box and it won’t touch the API at all. Do you think it’ll avoid blocks and ACs?

I think this new setting is there for a reason. With that said, yes, it should help avoid them, especially CAs.

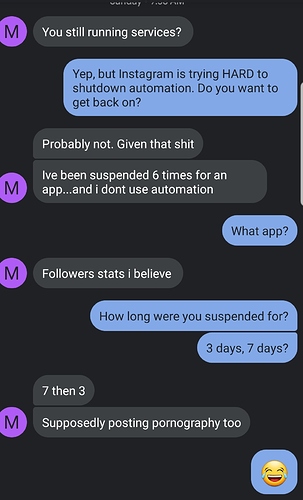

I’m sure multiple people have posted similar comments, but this is a conversation I had with a former client two days ago. He’s getting more temp/hard blocks using an app (from the Google Play Store) than I am getting from automating. Food for thought…

Just wanted to say thank you for all of your help and updates!

Has anyone tried the function USE ONLY THE EMBEDDED BROWSER (do not use the api)?

After I turned it on, the account couldn’t find any sources to follow.

You need a scraper account to feed the EB-only account. That’s because scraping is done via the API.

Aha!! I understand now!! Thanks

What do you mean, “Eb + api”?

eb = Activity

api = Scrap

Api->Source only?

What does “only if you send users from other accounts” mean?

You need to use another account to do the scraping and send the results to the EB-only account to follow. All scraping can only be done through the API.

So how long should the tool wait before it continues following? You mentioned 3H isn’t enough and causes an AC.

How do you do this?

Oh wow, so you’re saying that when we do actions (e.g. follow) on the EB, it’s been going like…

API - scrape

EB - follow

back to API - scrape

EB - follow

back to API - scrape

EB - follow ?

Damn, I had no idea.

check here

Is a scraper account able to perform more scrape actions without blocks than it could follow actions?

I mean, could I set up one (slave) scraper to feed enough results to, for example, 20 or more (slave) accounts?

Doesn’t it also get blocked when it only follows via lists, instead of scraping itself?

By list, do you mean the modal? Following just by scrolling down it?

It depends on the sources there, but yes, you can definitely do that. If there aren’t many sources, though, I would advise using more scrapers to feed those slaves.

But essentially it could scrape 24/7, preparing sources and making sure the actual slaves don’t get stuck on the search abort thing?

The only thing you’d need to do is feed the scraper thousands or millions of sources?